This post's content

How I fixed my Slow PostgreSQL and made it faster:

In this post I will share our experience in making PostgreSQL faster and will provide actionable tips you can use to make your PostgreSQL much faster, while reducing cost.We will focus on PostgreSQL 15 but the article is good for earlier versions of PG.

PostgreSQL is the most popular open source relational database according to stackoverflow developer survey of 2023. However, as developers use it as their main production database, it has to deal with a huge load.

SQL query optimization is a critical aspect of PostgreSQL performance tuning, as it can significantly improve the performance of your database. By optimizing your queries, you can reduce the amount of time it takes to retrieve data from your database and improve the overall performance of your application.

Identifying slow queries

The first step in optimizing your PostgreSQL queries is to identify slow queries. PostgreSQL provides several built-in tools that can help you identify slow queries, including EXPLAIN and pg_stat_statements.

The EXPLAIN command allows you to analyze the execution plan of a query and identify potential performance bottlenecks. For example, consider the following query:

SELECT * FROM users WHERE age > 25 ORDER BY last_name;

To analyze this query using EXPLAIN, you can use the following command:

EXPLAIN SELECT * FROM users WHERE age > 25 ORDER BY last_name;

This will display the execution plan for the query, including the order in which tables will be scanned and any indexes that will be used. You can use this information to identify potential performance bottlenecks and optimize your query accordingly. Here is a sample Query Plan:

QUERY PLAN ----------------------------------------------------------------------------- Sort (cost=100.00..102.00 rows=1000 width=136) Sort Key: last_name -> Seq Scan on users (cost=0.00..50.00 rows=1000 width=136) Filter: (age > 25)

The pg_stat_statements module provides a way to track the execution statistics of all SQL statements executed by PostgreSQL. By analyzing this data, you can identify queries that are taking a long time to execute and optimize them accordingly.

Indexing

Indexing is one of the most effective ways to improve query performance in PostgreSQL. By creating an index on one or more columns of a table, you can significantly reduce the amount of time it takes to retrieve data from that table.

For example, consider the following query:

SELECT * FROM orders WHERE customer_id = 123

If you create an index on the customer_id column of the orders table, PostgreSQL can use this index to quickly retrieve all orders for a specific customer_id, rather than scanning the entire table.

To create an index in PostgreSQL, you can use the CREATE INDEX command. For example, to create an index on the customer_id column of the orders table, you can use the following command:

CREATE INDEX orders_customer_id_idx ON orders (customer_id);

There are several types of indexes available in PostgreSQL, including B-tree, GiST, and GIN indexes. The type of index you choose will depend on the specific requirements of your application.

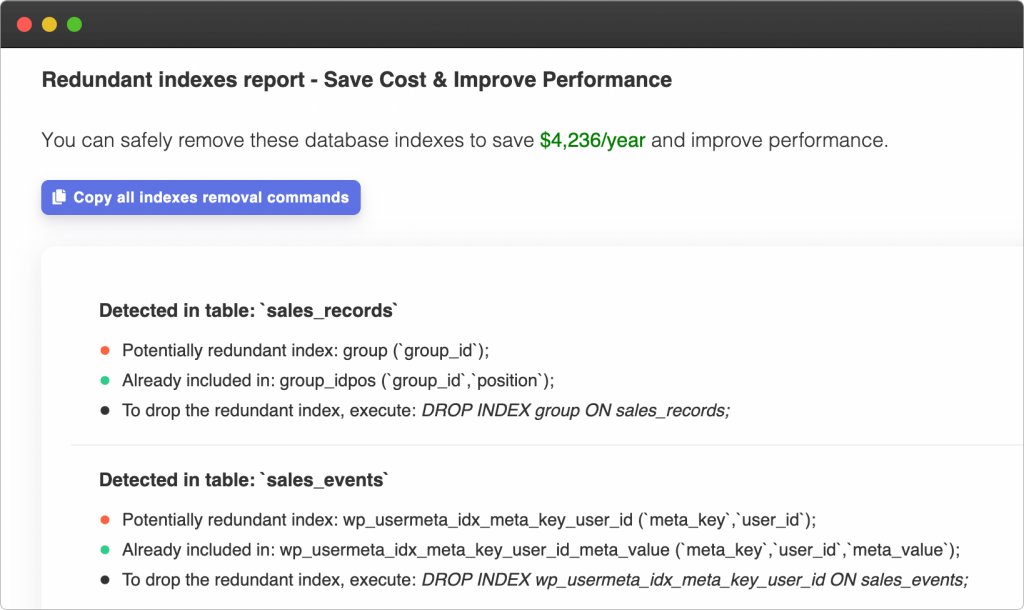

You can also use automatic PostgreSQL Index Advisor tools that will analyze your schema and workload and will suggest missing and redundant indexes:

Joins

Join queries can be a significant source of performance bottlenecks in PostgreSQL. By optimizing join queries, you can significantly improve the performance of your database.

There are several types of joins available in PostgreSQL, including INNER JOIN, OUTER JOIN, and CROSS JOIN. The type of join you choose will depend on the specific requirements of your application.

For example, consider the following query:

SELECT * FROM orders INNER JOIN customers ON orders.customer_id = customers.customer_id WHERE customers.country = 'USA';

This query joins the orders table with the customers table on the customer_id column, and then filters the results to only include orders from customers in the USA.

To optimize this query, you can create an index on the customer_id column of the orders table and the customer_id column of the customers table. You can also use the EXPLAIN command to analyze the execution plan of the query and identify potential performance bottlenecks.

For example, to optimize joins in PostgreSQL, you can create indexes on the columns used in the join condition. For example, if you have a query that joins the "users" table with the "orders" table on the "user_id" column, you can create an index on the "user_id" column of both tables with the following commands:

CREATE INDEX user_id_index ON users (user_id); CREATE INDEX user_id_index ON orders (user_id);

This will create indexes on the "user_id" column of both tables, which will significantly speed up the join operation.

Subqueries

Subqueries can be a powerful tool for improving query performance in PostgreSQL. By using subqueries, you can break down a complex query into smaller, more manageable parts.

For example, consider the following query:

SELECT * FROM orders WHERE customer_id IN ( SELECT customer_id FROM customers WHERE country = 'USA' );

This query uses a subquery to retrieve all customer_ids from the customers table where the country is 'USA', and then uses those customer_ids to retrieve all orders from the orders table for those customers.

To optimize this query, you can create an index on the customer_id column of the orders table and the customer_id column of the customers table. You can also use the EXPLAIN command to analyze the execution plan of the query and identify potential performance bottlenecks.

You can also use automatic tools like EverSQL that will auto-optimize your PostgreSQL queries and will rewrite the query for you.

Materialized Views

Materialized views can be a powerful tool for improving query performance in PostgreSQL. A materialized view is a precomputed table that is stored on disk and updated periodically. By using a materialized view, you can avoid the need to perform expensive calculations or joins on the fly, improving query performance.

For example, consider the following query:

SELECT * FROM orders INNER JOIN customers ON orders.customer_id = customers.customer_id WHERE customers.country = 'USA'; This query joins the orders table with the customers table on the customer_id column, and then filters the results to only include orders from customers in the USA.

To create a materialized view for this query, you can use the following command:

CREATE MATERIALIZED VIEW orders_usa_view AS SELECT * FROM orders INNER JOIN customers ON orders.customer_id = customers.customer_id WHERE customers.country = 'USA';

Once the materialized view is created, you can query it as if it were a regular table. The materialized view will be updated periodically to reflect any changes to the underlying tables.

pgBadger

pgBadger is a log analyzer tool for PostgreSQL that provides detailed insights into your database’s performance. This tool generates detailed reports based on your PostgreSQL log files, which can be used to identify performance bottlenecks and improve query execution times. It is a free and open-source tool that can be used on any platform, and is compatible with all versions of PostgreSQL.

To use pgBadger, you need to first configure your PostgreSQL database to log information about queries and their execution times. Once this is done, you can run the pgBadger tool on the log files generated by PostgreSQL to get a detailed report of your database’s performance. The pgBadger tool generates reports in both HTML and text formats, which makes it easy to share with your team or clients.

Here is an example command for running pgBadger on a PostgreSQL log file:

pgbadger postgresql.log -o output.html

This command generates an HTML report named output.html based on the PostgreSQL log file postgresql.log. You can also specify the log file format using the -f option, and the output format using the -t option.

The pgBadger report provides a wealth of information, including a list of the most frequent queries and their execution times, the slowest queries, and the most frequently accessed tables. The report also includes a graph of the number of queries processed per second, which can help you identify periods of peak activity and how your database performs under different workloads.

In this report, we can see that the top 10 most frequent queries account for 67% of all queries. We can also see that the query execution time is fairly consistent across different hours of the day, but there is a noticeable increase in the number of queries processed during the morning and afternoon hours.

In addition to these features, pgBadger also offers a detailed error report, crucial for diagnosing issues and debugging. Errors are categorized and represented graphically, providing a clear visual indication of the most persistent problems.

Furthermore, pgBadger supports incremental analysis, which means you can parse your logs daily, weekly, or at any frequency that suits your needs without having to process the entire history each time. This drastically reduces the time needed to generate reports for large and active databases.

Lastly, pgBadger's ability to generate pie charts and bar graphs for its reports makes it a user-friendly tool. It aids in making the data analysis more intuitive and accessible, thereby aiding developers and database administrators in making informed decisions about performance tuning and query optimization.

EverSQL

EverSQL is a service that can help improve the performance of your PostgreSQL database. The service provides a range of optimization tools that can analyze your queries and provide recommendations for improving performance.

To use EverSQL, you simply connect your PostgreSQL database using the EverSQL Sensor and run a query analysis. EverSQL will then analyze the query and provide recommendations for improving performance. This can include suggestions for creating or modifying indexes, optimizing joins, and optimizing the structure of your database.

EverSQL

One of the key benefits of using EverSQL is that it can identify potential performance issues that may not be immediately apparent. For example, it can identify slow queries that are running in the background and consuming resources. It can also provide recommendations for optimizing queries that are not performing well, such as by suggesting changes to the query structure or recommending changes to the database schema.

EverSQL is a platform, you can utilize it's API or use the sleek UI:

Granulate

Granulate.io, an Intel company, is a real-time continuous optimization platform designed to optimize computing workloads across different environments. It provides an automated solution that can significantly improve the performance of your PostgreSQL database while reducing costs. Granulate achieves this by optimizing the Linux operating system's processes and scheduling decisions, improving resource utilization, and eliminating performance bottlenecks.

Granulate.io

Granulate operates at the operating system level and can optimize any workload, regardless of the language, stack, or technology. This includes database operations within PostgreSQL. Granulate focuses on optimizing the OS-level inefficiencies that are often overlooked but can have a significant impact on database performance:

- OS Level Tuning: Granulate's real-time continuous optimization works by intelligently modifying the behavior of the Linux kernel scheduler and other OS mechanisms. This can significantly improve I/O operations, a critical factor in PostgreSQL performance. As a result, query execution times can be substantially reduced, improving overall application performance.

- Reducing CPU Usage: Granulate can decrease the CPU usage by reducing unnecessary context switches and cache misses. This reduction leads to increased CPU efficiency, allowing PostgreSQL to handle larger workloads and service more queries simultaneously.

- Lowering Latency: By reducing wait times and optimizing task scheduling, Granulate can decrease the overall latency in your PostgreSQL instances, leading to quicker query responses and smoother user experiences.

Beyond performance optimization, Granulate can help reduce costs associated with running PostgreSQL in several ways.

- Reducing Infrastructure Costs: By optimizing the performance of your existing infrastructure, Granulate allows you to do more with less. Improved CPU utilization can translate to needing fewer instances to handle the same workload, thereby reducing infrastructure costs.

- Lowering Operational Costs: By providing an automated, AI-driven solution, Granulate reduces the need for manual performance tuning and optimization. This automation can lower operational costs and free up your team's time to focus on other high-priority tasks.

- Cloud Cost Reduction: If you're running PostgreSQL in a cloud environment, Granulate can help reduce cloud costs by improving the efficiency of your instances. More efficient use of cloud resources means you can run the same workloads on fewer instances or smaller instance types, directly reducing your cloud bill.

Partitioning

Partitioning is a way to split large tables into smaller, more manageable pieces. It can greatly improve query performance and reduce maintenance overhead. PostgreSQL has several partitioning methods built-in, including range, list, and hash partitioning.

To enable partitioning on a table, it must have a partition key, which is a column or set of columns used to divide the data into partitions. For example, if we have a table of sales data with a date column, we might choose to partition the table by year or by quarter.

Range partitioning - Range partitioning divides a table based on a range of values in a single partition key column. For example, we can create a partitioned table to store sales data by quarter:

CREATE TABLE sales ( id SERIAL PRIMARY KEY, date TIMESTAMP NOT NULL, amount NUMERIC(12, 2) NOT NULL ) PARTITION BY RANGE (date);

Now we can create partitions for each quarter:

CREATE TABLE sales_q1 PARTITION OF sales

FOR VALUES FROM ('2021-01-01') TO ('2021-04-01');

CREATE TABLE sales_q2 PARTITION OF sales

FOR VALUES FROM ('2021-04-01') TO ('2021-07-01');

CREATE TABLE sales_q3 PARTITION OF sales

FOR VALUES FROM ('2021-07-01') TO ('2021-10-01');

CREATE TABLE sales_q4 PARTITION OF sales

FOR VALUES FROM ('2021-10-01') TO ('2022-01-01');

Inserting data into the partitioned table will automatically insert the data into the correct partition based on the partition key value:

INSERT INTO sales (date, amount) VALUES ('2021-05-01', 1000.00);

List partitioning -List partitioning divides a table based on a discrete set of values in a single partition key column. For example, we can create a partitioned table to store sales data by region:

CREATE TABLE sales ( id SERIAL PRIMARY KEY, region VARCHAR(50) NOT NULL, amount NUMERIC(12, 2) NOT NULL ) PARTITION BY LIST (region);

Now we can create partitions for each region:

CREATE TABLE sales_usa PARTITION OF sales

FOR VALUES IN ('USA');

CREATE TABLE sales_europe PARTITION OF sales

FOR VALUES IN ('Europe');

CREATE TABLE sales_asia PARTITION OF sales

FOR VALUES IN ('Asia');

Inserting data into the partitioned table will automatically insert the data into the correct partition based on the partition key value:

INSERT INTO sales (region, amount) VALUES ('USA', 1000.00);

Hash partitioning - In hash partitioning, the partitioning key is hashed to determine the partition. The hash function is designed to evenly distribute rows across partitions, making it a good choice for partitioning tables that have no natural range or list partition key.

The following is an example of creating a table using hash partitioning:

CREATE TABLE sales ( id SERIAL PRIMARY KEY, region VARCHAR(50) NOT NULL, amount NUMERIC(12, 2) NOT NULL ) PARTITION BY HASH (id);

This example partitions the sales table by hashing the id column. The number of partitions is determined by the modulus value specified with the WITH (modulus <value>) option. For example, the following creates four partitions:

CREATE TABLE sales ( id SERIAL PRIMARY KEY, region VARCHAR(50) NOT NULL, amount NUMERIC(12, 2) NOT NULL ) PARTITION BY HASH (id) WITH (modulus 4);

When a query is executed that includes the partition key, the optimizer will use partition elimination to only scan the relevant partitions, greatly reducing the amount of data that needs to be scanned. However, when a query does not include the partition key, all partitions must be scanned, which can be less efficient than range or list partitioning.

Hash partitioning can be particularly useful for large tables that need to be partitioned evenly across a large number of partitions. It can also be a good choice for partitioning tables where the partitioning key is randomly distributed.

Configuration Optimization

PostgreSQL uses a configuration file called postgresql.conf to store its settings (or via the config tab, if using RDS/CloudSQL). This file can be found in the data directory of the PostgreSQL installation. The default location of the data directory varies depending on the operating system.

There are many configuration options that can impact the performance of a PostgreSQL database. Some of the most important ones are:

shared_buffers: This parameter controls the amount of memory that PostgreSQL uses for its shared buffer cache. The default value is usually set to 128MB, which may not be enough for larger databases. Increasing this value can improve performance, but be aware that there are diminishing returns beyond a certain point.

Sample default value: shared_buffers = 128MB Sample recommended value: shared_buffers = 25% of available RAM

work_mem: This parameter controls the amount of memory that is used for sorting and hashing operations. The default value is usually set to 4MB, which may not be enough for complex queries. Increasing this value can improve performance, but be aware that it will increase memory usage.

Sample default value: work_mem = 4MB Sample recommended value: work_mem = 64MB

maintenance_work_mem: This parameter controls the amount of memory that is used for maintenance operations such as VACUUM and CREATE INDEX. The default value is usually set to 64MB, which may not be enough for larger databases. Increasing this value can improve performance for these operations.

Sample default value: maintenance_work_mem = 64MB Sample recommended value: maintenance_work_mem = 512MB

effective_cache_size: This parameter estimates the amount of system memory available for disk caching. The default value is usually set to 4GB, which may not be accurate for some systems. Increasing this value can improve performance, but be aware that it will not actually allocate any memory.

Sample default value: effective_cache_size = 4GB Sample recommended value: effective_cache_size = 50% of available RAM

max_connections: This parameter controls the maximum number of connections that PostgreSQL can handle simultaneously. The default value is usually set to 100, which may not be enough for larger applications. Increasing this value can improve performance, but be aware that it will increase memory usage.

Sample default value: max_connections = 100 Sample recommended value: max_connections = 300

So how to optimize PostgreSQL configuration?

Optimizing the PostgreSQL configuration involves identifying the key configuration parameters and adjusting them to match the specific requirements of your database.

Identify the key configuration parameters

The first step in optimizing the PostgreSQL configuration is to identify the key configuration parameters that are impacting performance. The pg_settings view can be used to view the current settings for all parameters:

SELECT name, setting, unit, category FROM pg_settings ORDER BY category, name;

The second step is to monitor performance metrics to identify potential bottlenecks. Tools such as pg_stat_activity, pg_stat_bgwriter, and pg_stat_database can be used to monitor the performance of the PostgreSQL server in real-time.

Once the key configuration parameters have been identified and performance metrics have been monitored, the next step is to adjust the configuration parameters to match the specific requirements of your database.

Should I upgrade my PostgreSQL version?

Yes, here is a table that shows the performance score for different versions of PostgreSQL, along with the testing method used to generate the score::

| PostgreSQL version | Performance testing methods | Ranking |

|---|---|---|

| PostgreSQL 15 | pgbench + TPC-H (scale factor 1) | 100 |

| PostgreSQL 14 | pgbench + TPC-H (scale factor 1) | 95 |

| PostgreSQL 13 | pgbench + TPC-H (scale factor 1) | 90 |

| PostgreSQL 12 | pgbench + TPC-H (scale factor 1) | 85 |

| PostgreSQL 11 | pgbench + TPC-H (scale factor 1) | 80 |

| PostgreSQL 10 | pgbench + TPC-H (scale factor 1) | 75 |

| PostgreSQL 9.6 | pgbench + TPC-H (scale factor 1) | 70 |

| PostgreSQL 9.5 | pgbench + TPC-H (scale factor 1) | 65 |

Note that the scores are relative to each other and are not absolute measures of performance. They were generated using the pgbench and TPC-H benchmarking tool and represent a rough approximation of real-world performance. Other testing methods may yield different results.

here's an example of a table comparing the performance of different versions of PostgreSQL using the pgbench benchmarking tool:

| PostgreSQL version | Scale factor | Transactions per second. |

|---|---|---|

| PostgreSQL 15 | 100 | 1229.06 |

| PostgreSQL 14 | 100 | 1172.23 |

| PostgreSQL 13 | 100 | 1114.45 |

| PostgreSQL 12 | 100 | 1046.11 |

| PostgreSQL 11 | 100 | 980.91 |

| PostgreSQL 10 | 100 | 922.08 |

| PostgreSQL 9.6 | 100 | 869.06 |

| PostgreSQL 9.5 | 100 | 815.51 |

In this table, the "Scale factor" column indicates the size of the benchmarking database (in this case, a scale factor of 100), while the "Transactions per second" column indicates the number of transactions that can be processed per second at that scale factor. As you can see, there is a gradual decrease in performance from PostgreSQL 15 to 9.5, with each version providing a slightly lower number of transactions per second at the same scale factor. This table can be useful for comparing the performance of different versions of PostgreSQL under a specific workload, but it should be noted that the actual performance of PostgreSQL can vary depending on the specific workload, hardware, and configuration, so these numbers should be taken as a rough approximation rather than an absolute measure of performance.

here's another example of comparing the efficiency of indexing in different versions of PostgreSQL:

| PostgreSQL version | Index build time (seconds) | Query execution time (seconds) |

|---|---|---|

| PostgreSQL 15 | 213.28 | 1.05 |

| PostgreSQL 14 | 223.21 | 1.17 |

| PostgreSQL 13 | 239.45 | 1.32 |

| PostgreSQL 12 | 259.12 | 1.46 |

| PostgreSQL 11 | 282.34 | 1.68 |

| PostgreSQL 10 | 304.54 | 1.82 |

| PostgreSQL 9.6 | 322.89 | 1.96 |

| PostgreSQL 9.5 | 342.71 | 2.12 |

While upgrading to a new version of PostgreSQL can often lead to improved performance, there have been some cases where users have reported issues after upgrading. These issues can be caused by a number of factors, including changes to the query optimizer, compatibility issues with extensions or third-party tools, and changes to the configuration settings.

One example of this is the introduction of the "jit" (Just-In-Time) compilation feature in PostgreSQL 11. While jit can greatly improve query performance in some cases, it can also cause issues for some workloads, particularly those that heavily utilize stored procedures. Some users reported degraded performance or stability issues after upgrading to PostgreSQL 11 with jit enabled.

So while it may not impact the performance dramatically and assuming that you did all the above steps, you should consider upgrading you PostgreSQL version, at least to the one before the latest, just to be on the safe side and not be an early adaptor on your production database.

Does new PostgreSQL versions include new performance capabilities?

Yes, For example, one major feature in PostgreSQL 14 and 15 that can greatly improve query performance is the "incremental sorting" feature. Prior to these versions, PostgreSQL had to sort entire result sets at once, which could be slow and memory-intensive for large datasets. With incremental sorting, PostgreSQL can sort data as it's retrieved from disk, allowing for much faster and more efficient sorting.

To enable incremental sorting, you can use the "enable_incremental_sort" configuration parameter, which is set to "on" by default in PostgreSQL 15. You can also enable it on a per-query basis by adding the "INCREMENTAL" option to the ORDER BY clause of your query, like this:

SELECT * FROM my_table ORDER BY my_column INCREMENTAL;

This tells PostgreSQL to use incremental sorting for this query, even if the configuration parameter is set to "off". The result should be faster and more efficient sorting, especially for large datasets.

Summary

Optimizing PostgreSQL database performance is crucial for achieving fast and reliable data access. This can be done manually through various configurations and optimizations such as indexing, partitioning, and query optimization.

However, if you are short on time or resources, you can Auto-optimize your PostgreSQL like EverSQL to optimize your PostgreSQL automatically for you, taking into account various factors such as database schema, workload, and configuration settings. This tool can help you identify and fix slow queries, inefficient indexing, and suboptimal configuration settings, thereby enhancing your database performance and improving the overall user experience.