This post's content

- COUNT(*) vs COUNT(field) vs COUNT(DISTINCT field)

- How to speed up COUNT(DISTINCT)

- How to programmatically approach a slow COUNT(DISTINCT) query

- Conclusion

While counting is common in every application, sometimes it is not enough: when we need to display the amount of unique users generating traffic in our website or provide the number of disparate items included in an order we need to apply a distinct count.

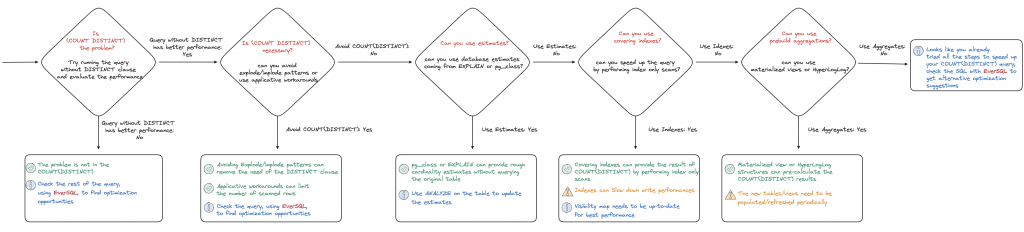

Compared to a classical count, the distinct one is usually slower since it needs to perform two actions: list the unique field values and then count them. This blog showcases several different methods to speed up COUNT(DISTINCT) SQL queries and provides a programmatic approach to delve into and solve the problem. A more detailed image of the workflow is available towards the end of the blog.

Note: While the blog post shows examples for PostgreSQL®, most of the techniques and similar solutions might exist for other databases.

Note: While the blog post shows examples for PostgreSQL®, most of the techniques and similar solutions might exist for other databases.

COUNT(*) vs COUNT(field) vs COUNT(DISTINCT field)

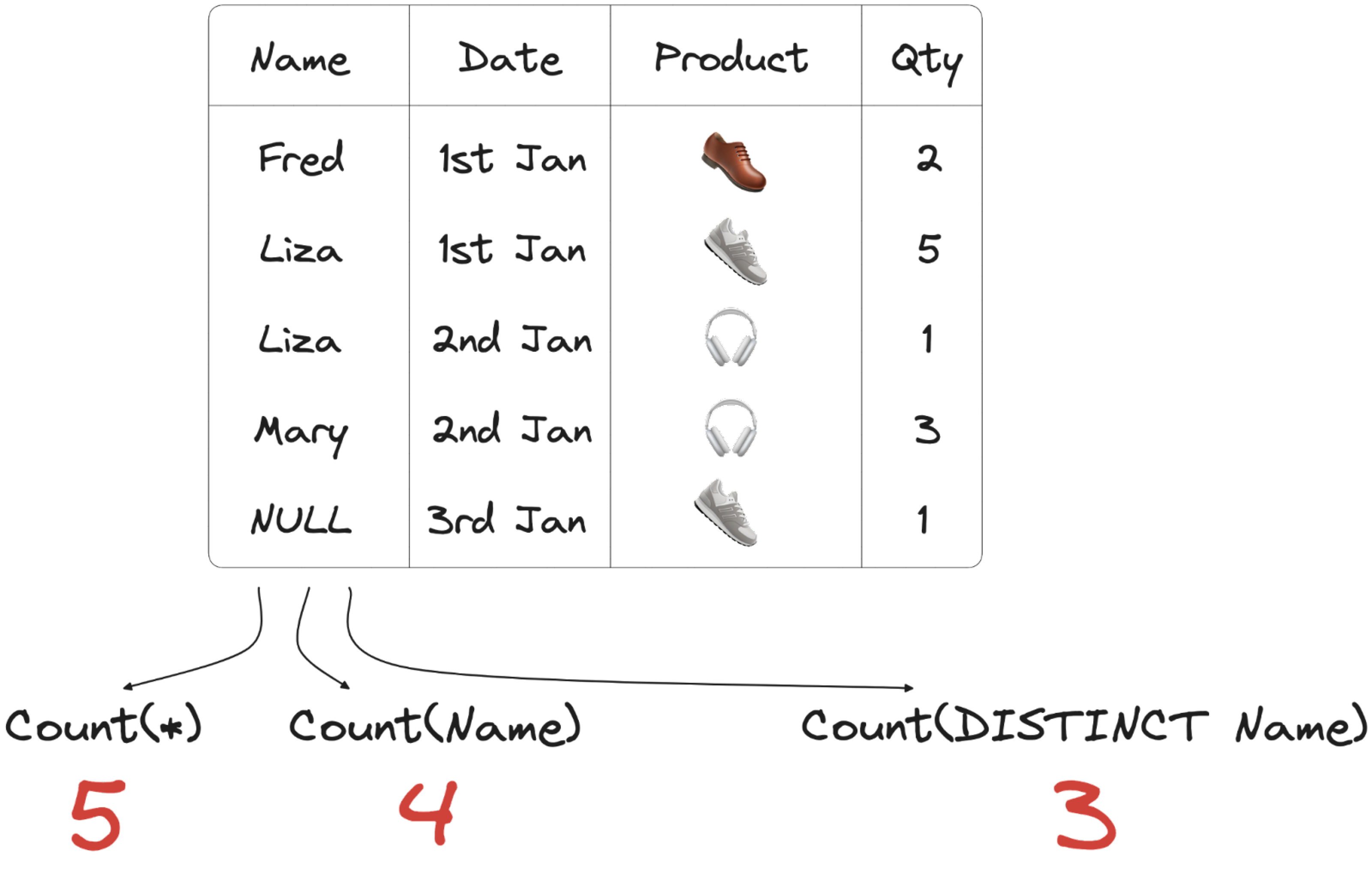

First, the theory: what exactly are COUNT and COUNT(DISTINCT) counting? The reply depends on what we are including in the COUNT statement.

COUNT(*)

The COUNT(*) function returns the number of rows retrieved by the SELECT statement. In the image example, if we issue:

SELECT COUNT(*)

FROM EXAMPLE_TBL

The query will return 5, the number of rows in our dataset without filtering. If we apply a WHERE condition like:

SELECT COUNT(*)

FROM EXAMPLE_TBL

WHERE Product='👟';

The result will be 2, again like the number of rows including 👟 in the purchase.

COUNT(field)

One would expect that COUNT(field) would work the same. However this is not the truth since it counts the number of NOT NULL rows for the specific field (or set of fields or expression) within the parenthesis. If, for example, we issue:

SELECT COUNT(Name)

FROM EXAMPLE_TBL;

The result will be 4, since the last row has a NULL value for the Name field. The same applies also if we are adding a WHERE clause, for example:

SELECT COUNT(Name)

FROM EXAMPLE_TBL

WHERE Product='👟';

Would result in 1 since the last row has the Name field equal to NULL.

COUNT(DISTINCT field)

Finally, the COUNT(DISTINCT field) which counts the number of different, non null, field values (or multiple fields or expressions) listed within the parentheses. If we issue the following statement:

SELECT COUNT(DISTINCT Name)

FROM EXAMPLE_TBL;

The result will be 3, since there are 3 different NOT NULL Names (Fred, Liza, and Mary).

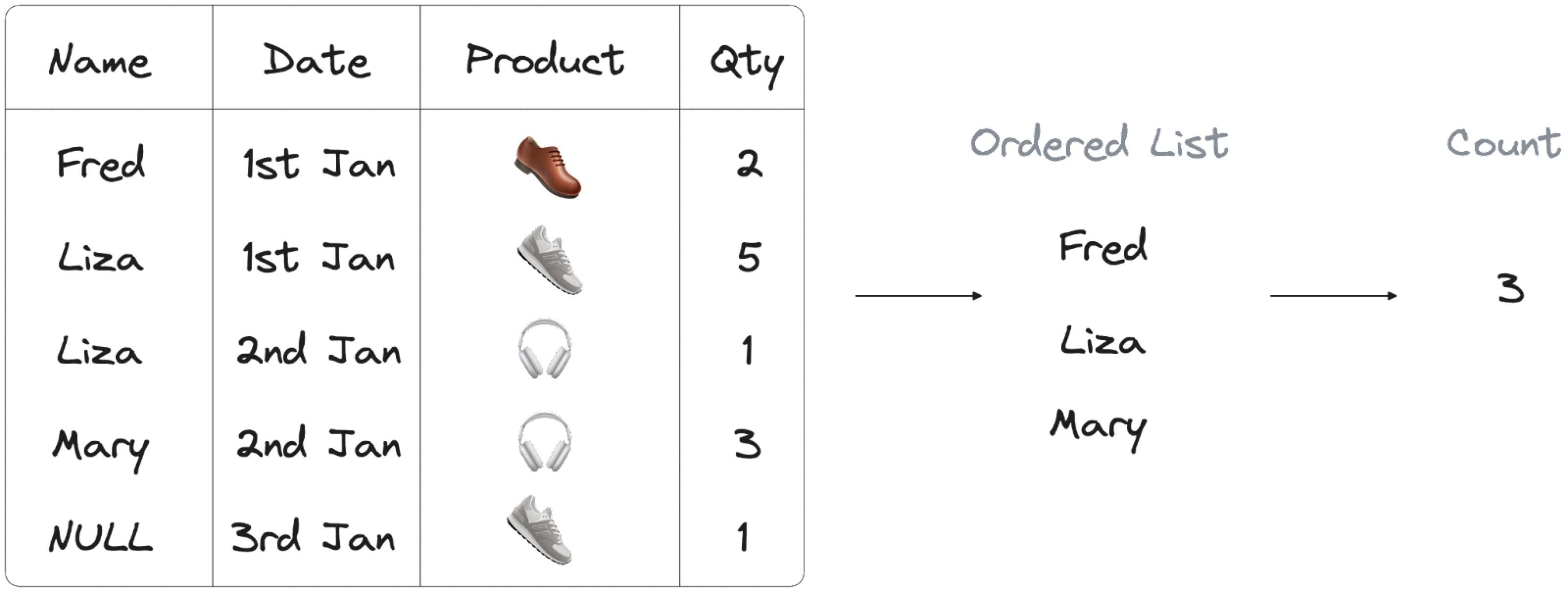

As mentioned above, COUNT(DISTINCT) is a two step process: first the database needs to retrieve the list of unique Names, and then count it. The implementation specifics might change across databases but usually the first step is done by creating an ordered list of the field(s) included in the COUNT(DISTINCT) and then counting the results.

How to speed up COUNT(DISTINCT)

Once we understand the basics, we can go back to the main topic of the blog: how to speed up our COUNT(DISTINCT)? There are at least 7 (+1) ways to do so, depending on your needs. We are going to use a PostgreSQL database for our test, but most of the solutions below will work on any database. We are going to use Aiven for PostgreSQL to recreate the example and delve into the options.

Setup a FREE PostgreSQL database

For our example, we’ll use a FREE PostgreSQL database on Aiven, that we can spin up with the following procedure:

- Sign up for a new account in the Aiven Console

- Select Create service

- Select PostgreSQL

- Select a Cloud Provider and region (The list of regions offering a FREE PostgreSQL is listed in the documentation)

- Select the service Plan

- Provide the service Name

- Click on Create service

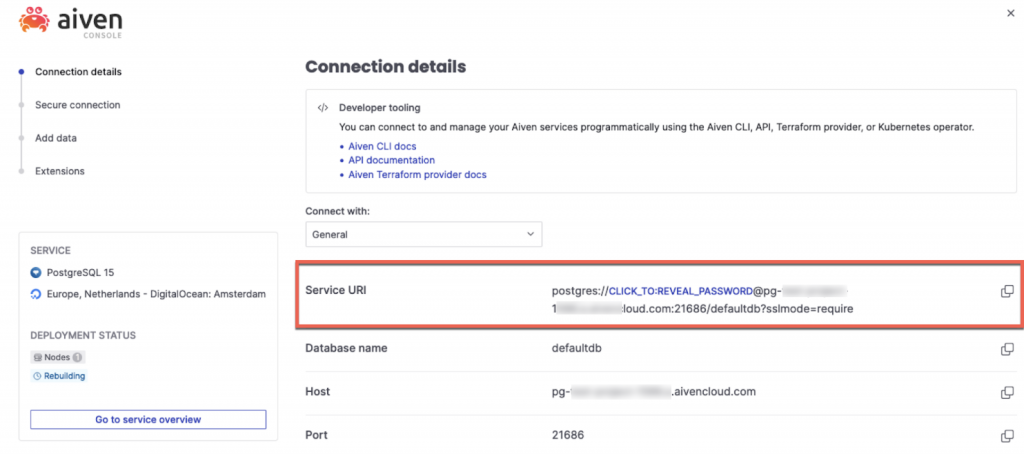

The above process will start the creation of a new Aiven for PostgreSQL service. Once the service is in RUNNING state, you can connect to it using psql and the connection string shown in the Service URI section.

We can recreate the example shown in the images above with the following SQL:

create table EXAMPLE_TBL (

Id serial primary key,

Username text,

Purchase_Date Date,

Product text,

Qty int

);

Insert into EXAMPLE_TBL (Username, Purchase_Date, Product, Qty)

VALUES

('Fred', '2024-01-01', '👞', 2),

('Liza', '2024-01-01', '👟', 5),

('Liza', '2024-01-02', '🎧', 1),

('Mary', '2024-01-02', '🎧', 3),

(NULL , '2024-01-03', '👟', 1);

With the dataset loaded in PostgreSQL, it’s time to check our options to speed up the COUNT(DISTINCT) queries.

Speed up COUNT(DISTINCT) using database estimates

Sometimes we don’t need full precision in the number we are reporting, what’s the difference between 1.568.121 users and 1.569.200 users if we are showing 1.5mil users anyway? In such cases we can make use of the database cardinality estimates.

The benefit of using estimates is that we can rely on querying database infrastructure tables containing a recent approximate cardinality instead of having to scan the real table.

Approximate count using the database cardinality estimate tables

The database cardinality estimates are rough counts of the number of rows and distinct values available in the tables that are contained in the database.

These counts are useful for the database engine to determine which is the most effective plan to implement a specific SQL query: should it scan the entire table or use an index? Should it perform a hash join or a nested loop? All these questions can be answered properly with a good grasp of the data volumes included in the query, that the database engine keeps in a dedicated set of tables.

In PostgreSQL we can query pg_class which contains the most recent estimate for a specific table. In our example, if we execute:

SELECT reltuples AS estimate

FROM pg_class

WHERE relname = 'example_tbl';

The estimate will show 0 rows. Weird right? This is due to the fact that we didn’t run ANALYZE after loading the table, therefore the database doesn’t have up to date statistics about the EXAMPLE_TBL. If we run:

ANALYZE EXAMPLE_TBL;And then rerun the same SELECT statement we defined above, we’ll have the updated 5 count. We can use the pg_class table to get an estimate of the COUNT(*) on a specific query, but we need to be aware that the number is only updated by VACUUM, ANALYZE and other DDL commands like CREATE INDEX.

Note: A similar view is available for MySQL as well in the STATISTICS table within the INFORMATION_SCHEMA.

Approximate count using the result of EXPLAIN

The above solution is pretty static and works on the assumption we are counting (and not counting distinct) a single table column without specific WHERE conditions applied. PostgreSQL wiki suggests a better estimate, especially for complex queries, by parsing the result of the EXPLAIN command. The EXPLAIN command provides back the current database cardinality estimation and plan for the query and takes into consideration WHERE conditions.

We can create a function that parses the result of the EXPLAIN call like the following:

CREATE OR REPLACE FUNCTION count_estimate(

query text

) RETURNS integer LANGUAGE plpgsql AS $$

DECLARE

plan jsonb;

BEGIN

EXECUTE 'EXPLAIN (FORMAT JSON)' || query INTO plan;

RETURN plan->0->'Plan'->'Plan Rows';

END;

$$;

And then get the database estimate for the specific query with:

SELECT count_estimate('SELECT DISTINCT username FROM example_tbl WHERE Qty >= 2');The result of the EXPLAIN is the following:

QUERY PLAN

-----------------------------------------------------------------------

Unique (cost=1.07..1.08 rows=2 width=5)

-> Sort (cost=1.07..1.08 rows=2 width=5)

Sort Key: username

-> Seq Scan on example_tbl (cost=0.00..1.06 rows=2 width=5)

Filter: (qty >= 2)

(5 rows)

And the function is able to correctly retrieve the expected cardinality of 2. Please note again that the result is an estimate, the correct result would be 3 (Fred, Liza and Mary).

Speed up COUNT(DISTINCT) using aggregations

The two solutions defined above are useful only if we want to understand the raw estimate from the database point of view. What if we need a precise number at a precise point in time? If the timing is known upfront (as example, every day at 8AM), we could pre-calculate the counts in dedicated structures.

This option could speed up COUNT(DISTINCT) queries in read-intensive environments, with rare updates/writes, since the overhead to update the aggregated data will be minimal due to the scarcity of writes. If for example we partition our order tables per day, we could perform the aggregated count in all the previous day's partitions since they will not have any updates/deletes happening.

Before analyzing the aggregates, let’s populate the EXAMPLE_TBL with a bit more data using the following SQL:

INSERT INTO EXAMPLE_TBL (Username, Purchase_Date, Product, Qty)

SELECT 'User' || floor(random() * 200 + 1)::int,

CURRENT_DATE - floor(random() * 5 + 1)::int,

CASE floor(random() * 3 + 1)::int

WHEN 1 then '👞'

WHEN 2 then '👟'

WHEN 3 then '🎧'

END,

floor(random() * 10 + 1)::int

FROM generate_series(1,1000000);

Once run, the above SQL generates 1.000.000 rows in the EXAMPLE_TBL.

Aggregating via materialized views

Most databases including PostgreSQL have the concept of a Materialized View: a way to store the result of a select statement in a dedicated table and have the database automatically use this table for matching queries.

If, for example, we create a materialized view over our table, calculating the different usernames for each product with:

CREATE MATERIALIZED VIEW EXAMPLE_MW AS

SELECT PRODUCT,

COUNT(DISTINCT USERNAME)

FROM EXAMPLE_TBL

GROUP BY PRODUCT;

We can see in the database a new materialized view, called example_mw, by querying the pg_matviews system view:

SELECT * FROM pg_matviews WHERE matviewname = 'example_mw';Keep in mind that the difference between a normal view and a materialized one is that the results of the latter are actually stored in the database. If now we issue a query like:

SELECT PRODUCT,

COUNT(DISTINCT USERNAME)

FROM EXAMPLE_TBL

GROUP BY PRODUCT;

The above query takes approximately 6346.786 ms, while if we run a similar query over the materialized view:

SELECT * FROM example_mw;

It returns in 16.683 ms! However, we need to remember that materialized views are physicalized on creation and not automatically updated, therefore to have accurate results, we need to refresh the materialized view with:

REFRESH MATERIALIZED VIEW example_mw;Which, in the example, took 6490.287 ms.

As mentioned before, using a materialized view can be a good solution in cases when the underlying table doesn’t have frequent writes. Calculating the materialized view once and querying it continuously speeds up the performance.

Aggregating via dedicated data structures: hyperloglog

Citus postgresql-hll introduces a new data type, called hll, which is a HyperLogLog data structure, a fixed-size, set-like structure used for distinct value counting with tunable precision. The beauty of the HyperLogLog table is that we can feed in the data structure once at a detailed granularity (as example at Purchase_Date) and query it at a more aggregate level (e.g. at Purchase Month) relying on the ability of the hll structure to estimate the aggregations.

In our example we first need to enable the extension:

CREATE EXTENSION hll;Then we can create a dedicated table with:

CREATE TABLE daily_users (

Purchase_Date date UNIQUE,

users hll

);

And feed it with:

INSERT INTO daily_users

SELECT Purchase_Date, hll_add_agg(hll_hash_text(Username))

FROM EXAMPLE_TBL

GROUP BY 1;With the hll table loaded (in 614.442 ms) we can now query it to retrieve the distinct number of users for a specific date:

SELECT Purchase_Date,

hll_cardinality(users)

FROM daily_users;Returning the result set in 18.357 ms.

As mentioned above, the beauty of hll is that it can approximate the aggregations, so if, for example we want to understand the number of distinct users in the last two days, we can query the table and have it aggregate the results with:

SELECT hll_cardinality(hll_union_agg(users))

FROM daily_users

WHERE Purchase_Date >= CURRENT_DATE -2;Even if we store the data at daily level, the hhl data structure is able to provide an estimation of the aggregation over multiple days.

Where hhl gets even more interesting is the ability to perform set computation across the dataset. If, for example, we want to understand which people purchased yesterday but not today we can do it with the following query:

SELECT Purchase_Date, (#hll_union_agg(users) OVER two_days) - #users AS lost_uniques

FROM daily_users

WINDOW two_days AS (ORDER BY Purchase_Date ASC ROWS 1 PRECEDING);The above query:

- Generates a window (

WINDOW two_days) of two days containing the current and previous date (ORDER BY Purchase_Date ASC ROWS 1 PRECEDING) - Calculates the overall aggregate number of users for the two days (

#hll_union_agg(users)) and subtracts the number of users of the second day.

Providing the results in just 157.709 ms. To achieve the same result we could write a query full of self-joins and count distinct with far worse performances and impact on the database.

Speed up COUNT(DISTINCT) using covering indexes

The aggregated methods defined above (materialized views and dedicated tables with hyperloglog data structures) provide a performance advantage at the cost of keeping the aggregation up to date.

An alternative that doesn’t require periodic refreshes is to speed up the COUNT(DISTINCT) by creating covering indexes. Covering indexes are specifically designed to include the columns needed by a particular type of query that you run frequently. Once these indexes are available, the database can perform an index only scan, and therefore parse only the index data instead of needing to query the original table.

In our case, if we are looking to speed up the count of distinct users per day with a query like:

SELECT PURCHASE_DATE, COUNT(DISTINCT USERNAME)

FROM EXAMPLE_TBL

GROUP BY PURCHASE_DATE;we could define an index like:

CREATE INDEX EXAMPLE_COVERING_IDX ON EXAMPLE_TBL(Purchase_date) INCLUDE(Username);If we now execute the EXPLAIN for the query running the aggregation over the days like:

EXPLAIN select Purchase_date, count(distinct Username) from EXAMPLE_TBL group by Purchase_date;We can see that the database is performing an index only scan instead of querying the entire table.

QUERY PLAN

--------------------------------------------------------------------------------------------------------------

GroupAggregate (cost=0.42..23853.59 rows=6 width=12)

Group Key: purchase_date

-> Index Only Scan using example_covering_idx on example_tbl (cost=0.42..18853.50 rows=1000005 width=11)

(3 rows)The index only scan performs the query in 1783.086 ms (two times faster than scanning the entire table). The database, to be able to perform an index only scan, needs to have an updated Visibility Map dictating which rows (tuples is the correct naming) are visible to a certain transaction. An out-of-date visibility map could mean more lookups in the table pages to determine if a certain tuple should be visible to the running query, and, therefore a decrease in performance. The vacuum process takes care of that maintenance, either when run through AUTOVACUUM or manually.

Note that adding indexes will likely slow down any write on the table since it will also need to update the index. Moreover, indexes are physical data structures in the database, they occupy space, and need periodic maintenance to keep their performance.

Reviewing EverSQL optimiser suggestions

Using EverSQL Optimiser on the original COUNT(DISTINCT) query provides the following suggestions:

- The creation of an index on the

PURCHASE_DATEfield by default

CREATE INDEX example_tbl_idx_purchase_date ON "EXAMPLE_TBL" ("PURCHASE_DATE");- The creation of a covering index, similar to the one mentioned above when the option “Generate another alternative with Covering Indexes (larger, but can be faster)” is selected

CREATE INDEX example_tbl_idx_purchase_date_username ON "EXAMPLE_TBL" ("PURCHASE_DATE","USERNAME");Speed up COUNT(DISTINCT) by re-evaluating the requirements

The set of suggestions provided above are based on the assumption that COUNT(DISTINCT) is necessary to provide the correct results. But there are scenarios where the COUNT(DISTINCT) operation can be reduced in scope or omitted by either re-evaluating the query or the driving requirement. The following section explores such scenarios and provides alternative solutions.

Speed up COUNT(DISTINCT) by avoiding explode/implode patterns with DISTINCT/GROUP BY

We run COUNT(DISTINCT) to count the unique values for a specific column in our tables. However there are cases in which the DISTINCT clause can be omitted altogether, making the query significantly faster.

Let’s modify our earlier example by separating the information into USERS and PURCHASES tables and adding a TAX_ID column defining the user’s tax identification code that is unique per user but that could be NULL. The following script creates the two tables and populates them with data.

CREATE TABLE USERS (

ID SERIAL PRIMARY KEY,

USERNAME TEXT,

TAX_ID TEXT UNIQUE);

CREATE TABLE PURCHASES (

ID SERIAL PRIMARY KEY,

USER_ID INT,

PURCHASE_DATE DATE,

PRODUCT TEXT,

QTY INT,

CONSTRAINT fk_user

FOREIGN KEY(user_id)

REFERENCES users(id)

);

INSERT INTO USERS (USERNAME, TAX_ID)

SELECT 'USER' || GENERATE_SERIES,

case when floor(random() * 10 + 1)::int > 8 then NULL else 'TAX_ID' || GENERATE_SERIES end

FROM GENERATE_SERIES(1, 20000);

INSERT INTO PURCHASES(USER_ID, PURCHASE_DATE, PRODUCT, QTY)

SELECT floor(random() * 20000 + 1)::int,

CURRENT_DATE - floor(random() * 5 + 1)::int,

CASE floor(random() * 3 + 1)::int

WHEN 1 then '👞'

WHEN 2 then '👟'

WHEN 3 then '🎧'

END,

floor(random() * 10 + 1)::int

FROM generate_series(1,10000000);

To get the number of distinct users who made a purchase yesterday and have a TAX_ID filled we might write the following query:

SELECT COUNT(DISTINCT USERS.TAX_ID)

FROM USERS JOIN PURCHASES ON USERS.ID = PURCHASES.USER_ID

WHERE PURCHASES.PURCHASE_DATE = CURRENT_DATE -1;

The query takes 8287.605 ms to produce the results. In the above SQL, we can notice that we are performing the COUNT(DISTINCT) because the same users can purchase multiple items during one day and therefore we need to first get the list of TAX_ID. (the TAX_ID is unique therefore is equivalent to listing the number of users) and then count the number of items in the list.

However, we could eliminate the need of the DISTINCT by only performing the count over the USERS table. To do so, we need to avoid the join with the table PURCHASES, but since it’s done only to check the existence of a specific purchase on the date, we could replace the join with an EXISTS subquery:

SELECT COUNT(USERS.TAX_ID)

FROM USERS

WHERE EXISTS(

SELECT *

FROM PURCHASES

WHERE

USER_ID=USERS.ID

AND PURCHASES.PURCHASE_DATE = CURRENT_DATE -1

);Which runs in 1658.140 ms, roughly ¼ of the time needed for the original COUNT(DISTINCT) query. If you want to understand more how EXISTS works, check the blog post on 5 ways to implement NOT EXISTS in PostgreSQL.

Speed up COUNT(DISTINCT) with applicative workarounds

In some use cases, the COUNT(DISTINCT) is performed to visualize the total amount of expected results alongside the details of the first set of detailed rows. For instance the total number of distinct users while showing the first 100 user details in a table view.

However sometimes showing the correct amount is unnecessary since it’s beyond the scope of perception for most users. For example, showcasing to the end user that there are 50.000 or 150.000 results doesn’t really provide much additional value if they are working on a list presenting the first 50 of them.

In such cases it could be useful to add a LIMIT clause filtering the original dataset, in order to provide a raw estimate of the result on a subset of the data. For instance, in our case we could execute the following:

SELECT COUNT(DISTINCT Username)

FROM (SELECT Username from EXAMPLE_TBL ORDER BY ID LIMIT 10000) SUB;

The above query counts the number of distinct users within the first 10000 rows and will return a number that is <=10000. If our need is to showcase a vague indicator of the total number of users, we could either showcase the result of the query or, if it’s close to the 10000 boundary, show 10000+ Users.

Is it really a COUNT(DISTINCT) problem?

The last piece of advice is to double check the assumptions: are we sure it’s really a problem with the COUNT(DISTINCT) part of the query? To validate the assumption we can execute the same query without the DISTINCT clause and check the performance. If we don’t notice any performance improvement we need to dig into other parts of the query that could cause a slow response.

How to programmatically approach a slow COUNT(DISTINCT) query

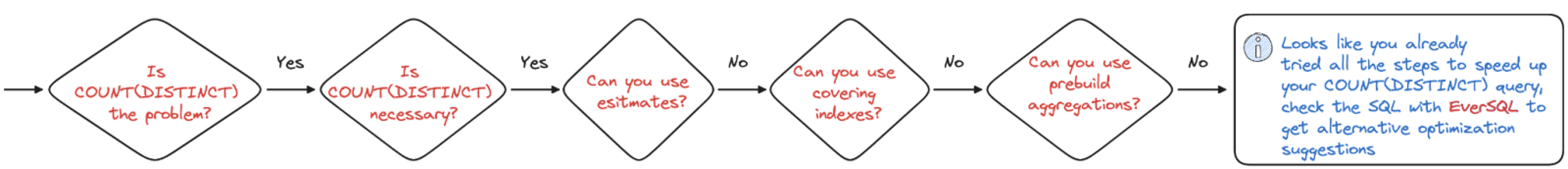

The following graph provides a programmatic approach to troubleshooting and speed up COUNT(DISTINCT) queries making various methods listed above more effective. You can click on the image to check it in full resolution.

The above workflow defines the following steps:

- The first check is to validate that the

COUNT(DISTINCT)is effectively the bottleneck by evaluating the query performance when theDISTINCTclause is removed. If there’s no evident performance gain, this might be a sign that the problem is elsewhere in the query. Try pasting your SQL query into EverSQL optimizer to receive SQL rewrite suggestions that could speed up your performance. - The second step evaluates the actual need of performing the

COUNT(DISTINCT)by evaluating alternatives like using application workarounds or avoiding explode/implode patterns that could enable the usage of a fasterCOUNT() - The third step evaluates the usage of database cardinality estimates, in cases where we don't need an exact number but a close approximation is sufficient. Such cases could be handled by querying the

pg_classview or parsing the output of theEXPLAINcall. - If we need a more precise number, covering indexes can speed up query time by performing index only scans. The drawback of using indexes is that we’ll pay a small penalty on writes.

- An alternative, specifically valid for writing scarce workloads, is the usage of materialized views or HyperLogLog data structures. These auxiliary data structures allow to pre-compute the calculation. The only drawback is that it’s up to the human to schedule a refresh of the data.

Conclusion

We use COUNT(DISTINCT) queries in applications to display insight about the different set of items at work. These queries usually provide sub-optimal performance since it’s a two step calculation (list distinct values and count) that can’t be pre-aggregated and rolled up.

But we can improve the COUNT(DISTINCT) performance: with the set of methods defined above, the workflow defined in the last section and the SQL optimization suggestions from EverSQL, we have several knobs available to speed up the COUNT(DISTINCT) SQL queries. Moreover, while exploring how to optimize a query, we learn about a wide set of features and functionalities available in the database. Understand how they can be used to speed up a workload what we should pay attention to when adopting them.